If you’ve spent enough time developing for the web, this piece of feedback has landed in your inbox since time immemorial:

“This photo looks blurry. Can we replace it with a better version?”

Every time this feedback reaches me, I’m inclined to question it: “What about the photo looks bad to you, and can you tell me why?”

That’s a somewhat unfair question to counter with. The complaint is rooted in a subjective perception of image quality, which in turn is influenced by many factors. Some are technical, such as the export quality of the image or the compression method (often lossy, as is the case with JPEG-encoded photos). Others are more intuitive or perceptual, such as the content of the image and how compression artifacts mingle within it. Perhaps even performance plays a role we’re not entirely aware of.

Fielding this kind of feedback for many years eventually led me to design and develop an image quality survey, which was my first go at building a research project on the web. I started with twenty-five photos shot by a professional photographer. With them, I generated a large pool of images at various quality levels and sizes. Images were served randomly from this pool to users, who were asked to rate their quality.

Results from the first round were interesting, but not entirely clear: users seemed to have a tendency to overestimate the actual quality of images, and poor performance appeared to have a negative impact on perceptions of image quality, but this couldn’t be stated conclusively. A number of UX and technical issues also stood in the way. Rather than spinning my wheels trying to extract conclusions from the first round results, I decided it would be best to improve the survey as much as possible and conduct a second round of research to get better data. This article chronicles how I first built the survey, and then how I listened to user feedback to improve it.

Defining the research

Of the subjects within web performance, image optimization is especially vast. There’s a wide array of formats, encodings, and optimization tools, all of which are designed to make images small enough for web use while maintaining reasonable visual quality. Striking the balance between speed and quality is really what image optimization is all about.

This balance between performance and visual quality prompted me to consider how people perceive image quality. Lossy image quality, in particular. Eventually, this train of thought led to a series of questions spurring the design and development of an image quality perception survey. The idea of the survey is that users provide subjective assessments of quality. This is done by asking participants to rate images without an objective reference for what’s “perfect.” This is, after all, how people view images in situ.

A word on surveys

Any time we want to quantify user behavior, it’s inevitable that a survey is at least considered, if not ultimately chosen to gather data from a group of people. After all, surveys are perfect when your goal is to get something measurable. However, the survey is a seductively dangerous tool, as Erika Hall cautions. Surveys are easy to make and conduct, and are routinely abused in their dissemination. They’re not great tools for assessing past behavior. They’re just as bad (if not worse) at predicting future behavior. For example, the 1–10 scale often employed by customer satisfaction surveys doesn’t really say much of anything about how satisfied customers actually are or how likely they’ll be to buy a product in the future.

The unfortunate reality, however, is that short of my lording over hundreds of participants in person, the survey is the only truly practical tool I have to measure how people perceive image quality, as well as whether (and potentially how) performance metrics correlate to those perceptions. When I designed the survey, I kept to the following guidelines:

- Don’t ask participants about anything other than what their perceptions are in the moment. Once a participant has moved on, their recollection of what they just did rapidly diminishes.

- Don’t assume participants know everything you do. Guide them with relevant copy that succinctly describes what you expect of them.

- Don’t ask participants to provide assessments with coarse inputs. Use an input type that permits them to finely assess image quality on a scale congruent with the lossy image quality encoding range.

All we can do going forward is acknowledge we’re interpreting the data we gather under the assumption that participants are being truthful and understand the task given to them. Even if the perception metrics are discarded from the data, there are still some objective performance metrics gathered that could tell a compelling story. From here, it’s a matter of defining the questions that will drive the research.

Asking the right questions

In research, you’re seeking answers to questions. In the case of this particular effort, I wanted answers to these questions:

- How accurate are people’s perceptions of lossy image quality in relation to actual quality?

- Do people perceive the quality of JPEG images differently than WebP images?

- Does performance play a role in all of this?

These are important questions. To me, however, answering the last question was the primary goal. But the road to answers was (and continues to be) a complex journey of design and development choices. Let’s start out by covering some of the tech used to gather information from survey participants.

Sniffing out device and browser characteristics

When measuring how people perceive image quality, devices must be considered. After all, any given device’s screen will be more or less capable than others. Thankfully, HTML features such as srcset and picture are highly appropriate for delivering the best image for any given screen. This is vital because one’s perception of image quality can be adversely affected if an image is ill-fit for a device’s screen. Conversely, performance can be negatively impacted if an exceedingly high-quality (and therefore behemoth) image is sent to a device with a small screen. When sniffing out potential relationships between performance and perceived quality, these are factors that deserve consideration.

With regard to browser characteristics and conditions, JavaScript gives us plenty of tools for identifying important aspects of a user’s device. For instance, the currentSrc property reveals which image is being shown from an array of responsive images. In the absence of currentSrc, I can somewhat safely assume support for srcset or picture is lacking, and fall back to the img tag’s src value:

const surveyImage = document.querySelector(".survey-image");
let loadedImage = surveyImage.currentSrc || surveyImage.src;

Where screen capability is concerned, devicePixelRatio tells us the pixel density of a given device’s screen. In the absence of devicePixelRatio, you may safely assume a fallback value of 1:

let dpr = window.devicePixelRatio || 1;

devicePixelRatio enjoys excellent browser support. Those few browsers that don’t support it (i.e., IE 10 and under) are highly unlikely to be used on high density displays.

The stalwart getBoundingClientRect method retrieves the rendered width of an img element, while the HTMLImageElement interface’s complete property indicates whether an image has finished loading. The latter of these two is important, because it may be preferable to discard individual results in situations where images haven’t loaded.
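Taken together, these checks could be gathered in one place. What follows is a minimal sketch rather than the survey’s actual code: the describeSpecimen name and the shape of the object it returns are assumptions made for illustration. The naturalWidth check is also an addition, since complete is true for an image that failed to load as well.

```javascript
// Hypothetical helper: summarizes what the browser reports about a
// survey image. `img` is an HTMLImageElement (or an object shaped like
// one) and `win` is the window object.
function describeSpecimen(img, win) {
  return {
    // Which candidate was chosen; falls back to src when currentSrc
    // is unavailable
    loadedImage: img.currentSrc || img.src,
    // Pixel density of the screen, assuming 1 when unreported
    dpr: win.devicePixelRatio || 1,
    // Rendered width of the image in CSS pixels
    renderedWidth: img.getBoundingClientRect().width,
    // complete is also true for broken images, so naturalWidth is
    // checked too (an assumption beyond what the article describes)
    loaded: img.complete === true && img.naturalWidth > 0
  };
}

// In a browser:
// describeSpecimen(document.querySelector(".survey-image"), window);
```

Passing the element and window in as arguments keeps the helper easy to exercise outside a browser.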

In cases where JavaScript isn’t available, we can’t collect any of this data. When ratings come from users who have JavaScript turned off (or are otherwise unable to run JavaScript), we have to accept there will be gaps in the data. The basic information we’re still able to collect does provide some value.

Sniffing for WebP support

As you’ll recall, one of the initial questions asked was how users perceived the quality of WebP images. The HTTP Accept request header advertises WebP support in browsers like Chrome. In such cases, the Accept header might look something like this:

Accept: image/webp,image/apng,image/*,*/*;q=0.8

As you can see, the WebP content type of image/webp is one of the content types advertised in the header. In server-side code, you can check Accept for the image/webp substring. Here’s how that might look in Express back-end code:

const WebP = (req.get("Accept") || "").includes("image/webp");

In this example, I’m recording the browser’s WebP support status to a JavaScript constant I can use later to modify image delivery. The fallback to an empty string guards against requests that omit the Accept header. I could use the picture element with multiple sources and let the browser figure out which one to use based on the source element’s type attribute value, but this approach has clear advantages. First, it’s less markup. Second, the survey shouldn’t always choose a WebP source simply because the browser is capable of using it. For any given survey specimen, the app should randomly decide between a WebP or JPEG image. Participants using Chrome shouldn’t rate only WebP images, but rather a random smattering of both formats.
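The random choice between formats might be sketched as follows. The chooseFormat helper, its return values, and the even split between formats are assumptions for illustration, not the survey’s actual logic.

```javascript
// Hypothetical helper: picks the format for one specimen.
// `acceptHeader` is the raw Accept request header (possibly undefined);
// `random` is injectable so the choice can be tested deterministically.
function chooseFormat(acceptHeader, random = Math.random) {
  const supportsWebP = (acceptHeader || "").includes("image/webp");

  // Browsers that don't advertise WebP always receive JPEG
  if (!supportsWebP) {
    return "jpg";
  }

  // Capable browsers get WebP roughly half of the time (assumed split)
  return random() < 0.5 ? "webp" : "jpg";
}

// In an Express route handler:
// const format = chooseFormat(req.get("Accept"));
```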

Recording performance API data

You’ll recall that one of the earlier questions I set out to answer was whether performance impacts the perception of image quality. At this stage of the web platform’s development, there are several APIs that aid in the search for an answer:

- Navigation Timing API (Level 2): This API tracks performance metrics for page loads. More than that, it gives insight into specific page loading phases, such as redirect, request and response time, DOM processing, and more.

- Navigation Timing API (Level 1): Similar to Level 2 but with key differences. The timings exposed by Level 1 of the API lack the accuracy of those in Level 2. Furthermore, Level 1 metrics are expressed in Unix time. In the survey, data is only collected from Level 1 of the API if Level 2 is unsupported. It’s far from ideal (and also technically obsolete), but it does help fill in small gaps.

- Resource Timing API: Similar to Navigation Timing, but Resource Timing gathers metrics on various loading phases of page resources rather than the page itself. Of all the APIs used in the survey, Resource Timing is used most, as it helps gather metrics on the loading of the image specimen the user rates.

- Server Timing: In select browsers, this API is brought into the Navigation Timing Level 2 interface when a page request replies with a Server-Timing response header. This header is open-ended and can be populated with timings related to back-end processing phases. This was added to round two of the survey to quantify back-end processing time in general.

- Paint Timing API: Currently only in Chrome, this API reports two paint metrics: first paint and first contentful paint. Because a significant slice of users on the web use Chrome, we may be able to observe relationships between perceived image quality and paint metrics.
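To illustrate the fallback from Level 2 to Level 1 of the Navigation Timing API, here is a rough sketch. The getNavigationTimings helper and the three metrics it returns are assumptions chosen for brevity; the survey’s own collection code may well differ.

```javascript
// Hypothetical helper: returns a few navigation timings in milliseconds,
// relative to the start of navigation. `perf` is window.performance or
// an object shaped like it.
function getNavigationTimings(perf) {
  if (!perf) {
    return null;
  }

  // Level 2: values are already relative to the time origin
  if (typeof perf.getEntriesByType === "function") {
    const [entry] = perf.getEntriesByType("navigation");

    if (entry) {
      return {
        level: 2,
        responseStart: entry.responseStart,
        domContentLoaded: entry.domContentLoadedEventEnd,
        load: entry.loadEventEnd
      };
    }
  }

  // Level 1: values are Unix timestamps, so subtract navigationStart
  if (perf.timing) {
    const t = perf.timing;

    return {
      level: 1,
      responseStart: t.responseStart - t.navigationStart,
      domContentLoaded: t.domContentLoadedEventEnd - t.navigationStart,
      load: t.loadEventEnd - t.navigationStart
    };
  }

  return null;
}

// In a browser, once the load event has finished:
// getNavigationTimings(window.performance);
```

Subtracting navigationStart puts the Level 1 values on the same footing as Level 2, which should make the two easier to store and compare side by side. Note that loadEventEnd reads 0 until the load event has completed, so the helper is best called afterward.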

Using these APIs, we can record performance metrics for most participants. Here’s a simplified example of how the survey uses the Resource Timing API to gather performance metrics for the loaded image specimen:

// Get information about the loaded image
const surveyImageElement = document.querySelector(".survey-image");
const fullImageUrl = surveyImageElement.currentSrc || surveyImageElement.src;
const imageUrlParts = fullImageUrl.split("/");
const imageFilename = imageUrlParts[imageUrlParts.length - 1];

// Check for performance API methods
if ("performance" in window && "getEntriesByType" in performance) {
  // Get entries from the Resource Timing API
  let resources = performance.getEntriesByType("resource");

  // Ensure resources were returned
  if (typeof resources === "object" && resources.length > 0) {
    resources.forEach((resource) => {
      // Check if the resource is for the loaded image
      if (resource.name.indexOf(imageFilename) !== -1) {
        // Access resource timings for the image here
      }
    });
  }
}

If the Resource Timing API is available, and the getEntriesByType method returns results, the entry for the image is an object with timings, looking something like this:

{
  connectEnd: 1156.5999999947962,
  connectStart: 1156.5999999947962,
  decodedBodySize: 11110,
  domainLookupEnd: 1156.5999999947962,
  domainLookupStart: 1156.5999999947962,
  duration: 638.1000000037602,
  encodedBodySize: 11110,
  entryType: "resource",
  fetchStart: 1156.5999999947962,
  initiatorType: "img",
  name: "https://imagesurvey.site/img-round-2/1-1024w-c2700e1f2c4f5e48f2f57d665b1323ae20806f62f39c1448490a76b1a662ce4a.webp",
  nextHopProtocol: "h2",
  redirectEnd: 0,
  redirectStart: 0,
  requestStart: 1171.6000000014901,
  responseEnd: 1794.6999999985565,
  responseStart: 1737.0999999984633,
  secureConnectionStart: 0,
  startTime: 1156.5999999947962,
  transferSize: 11227,
  workerStart: 0
}

I grab these metrics as participants rate images, and store them in a database. Down the road when I want to write queries and analyze the data I have, I can refer to the Processing Model for the Resource and Navigation Timing APIs. With SQL and data at my fingertips, I can measure the distinct phases outlined by the model and see if correlations exist.
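The same phase arithmetic can be sketched in JavaScript before any SQL is written. The measurePhases helper and its property names are assumptions for illustration; the subtractions follow the ordering of timestamps in the processing model.

```javascript
// Hypothetical helper: splits a Resource Timing entry into loading
// phases, each expressed in milliseconds.
function measurePhases(entry) {
  return {
    // DNS lookup
    dns: entry.domainLookupEnd - entry.domainLookupStart,
    // Connection setup
    connect: entry.connectEnd - entry.connectStart,
    // Time spent waiting for the first byte of the response
    waiting: entry.responseStart - entry.requestStart,
    // Time spent downloading the response body
    download: entry.responseEnd - entry.responseStart,
    // Whole request, from start to last byte
    total: entry.responseEnd - entry.startTime
  };
}
```

Applied to the entry shown above, this would report no time spent on DNS or connection setup, roughly 565 milliseconds of waiting, and roughly 58 milliseconds of download. Keep in mind that cross-origin resources served without a Timing-Allow-Origin header report zero for most of these timestamps, so phases computed from them aren’t meaningful.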

Having discussed the technical underpinnings of how data can be collected from survey participants, let’s shift the focus to the survey’s design and user flows.

Designing the survey

Though surveys tend to have straightforward designs and user flows relative to other sites, we must remain cognizant of the user’s path and the impediments a user could face.

The entry point

When participants arrive at the home page, we want to be direct in our communication with them. The home page intro copy greets participants, gives them a succinct explanation of what to expect, and presents two navigation choices:

From here, participants either start the survey or read a privacy policy. If the user decides to take the survey, they’ll reach a page politely asking them what their professional occupation is and requesting them to disclose any eyesight conditions. The fields for these questions can be left blank, as some may not be comfortable disclosing this kind of information. Beyond this point, the survey begins in earnest.

The survey primer

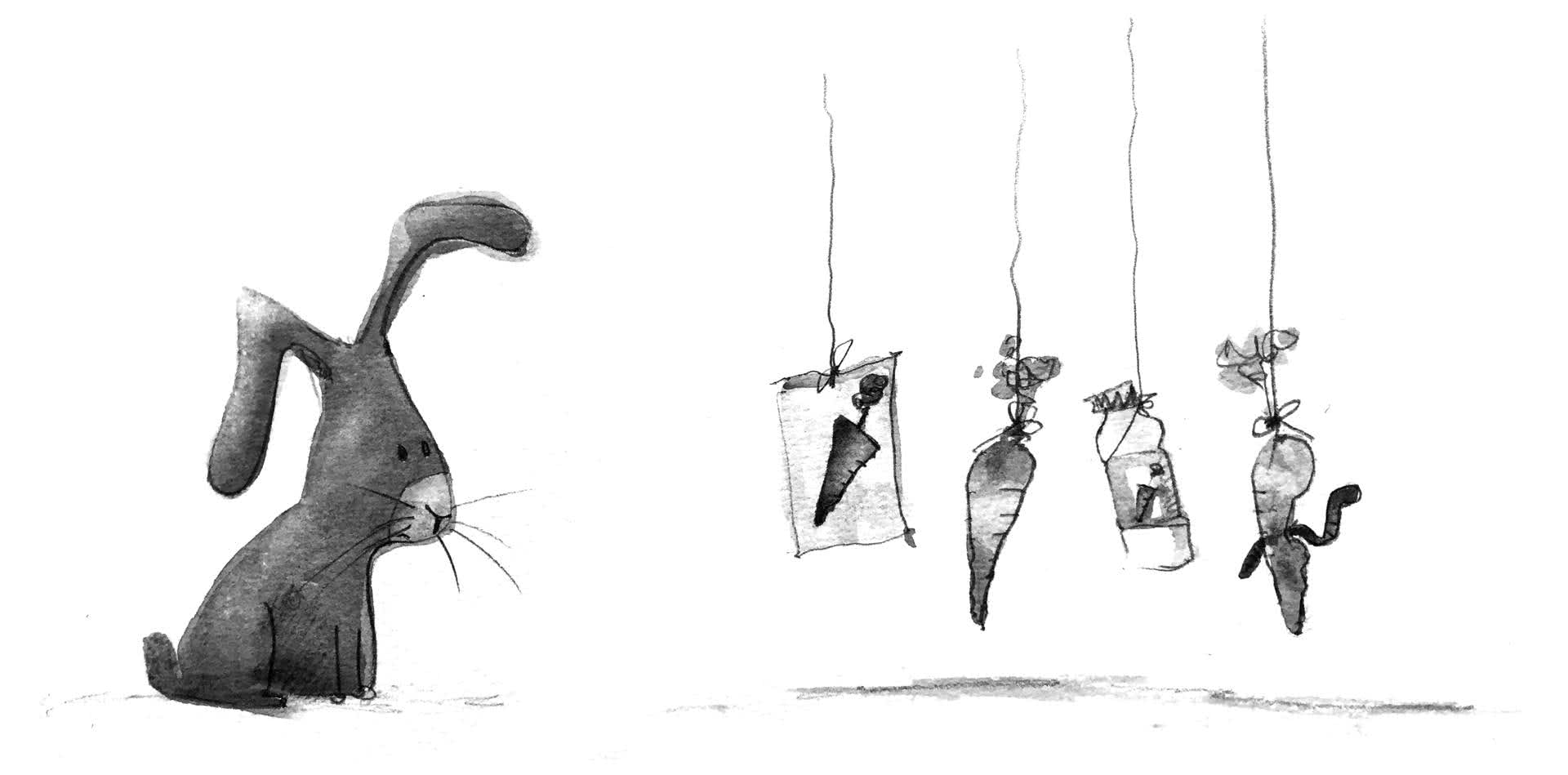

Before the user begins rating images, they’re redirected to a primer page. This page describes what’s expected of participants, and explains how to rate images. While the survey is promoted on design and development outlets where readers regularly work with imagery on the web, a primer is still useful in getting everyone on the same page. The first paragraph of the page stresses that users are rating image quality, not image content. This is important. Absent any context, participants may indeed rate images for their content, which is not what we’re asking for. After this clarification, the concept of lossy image quality is demonstrated with the following diagram:

Lastly, the function of the rating input is explained. This could likely be inferred by most, but the explanatory copy helps remove any remaining ambiguity. Assuming your user knows everything you do is not necessarily wise. What seems obvious to one is not always so to another.

The image specimen page

This page is the main event and is where participants assess the quality of images shown to them. It contains two areas of focus: the image specimen and the input used to rate the image’s quality.

Let’s talk a bit out of order and discuss the input first. I mulled over a few options when it came to which input type to use. I considered a select input with coarsely predefined choices, an input with a type of number, and other choices. What seemed to make the most sense to me, however, was a slider input with a type of range.

A slider input is more intuitive than a text input, or a select element populated with various choices. Because we’re asking for a subjective assessment about something with such a large range of interpretation, a slider allows participants more granularity in their assessments and lends further accuracy to the data collected.

Now let’s talk about the image specimen and how it’s selected by the back-end code. I decided early on in the survey’s development that I wanted images that weren’t prominent in existing stock photo collections. I also wanted uncompressed sources so I wouldn’t be presenting participants with recompressed image specimens. To achieve this, I procured images from a local photographer. The twenty-five images I settled on were minimally processed raw images from the photographer’s camera. The result was a cohesive set of images that felt visually related to each other.

To properly gauge perception across the entire spectrum of quality settings, I needed to generate each image from the aforementioned sources at ninety-six different quality settings ranging from 5 to 100. To account for the varying widths and pixel densities of screens in the wild, each image also needed to be generated at four different widths for each quality setting: 1536, 1280, 1024, and 768 pixels, to be exact. Just the job srcset was made for!

To top it all off, images also needed to be encoded in both JPEG and WebP formats. As a result, the survey draws randomly from 768 images per specimen across the entire quality range, while also delivering the best image for the participant’s screen. This means that across the twenty-five image specimens participants evaluate, the survey draws from a pool of 19,200 images total.
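The arithmetic behind those totals, along with one way a variant might be drawn, can be sketched like so. The counts come from the figures above; the file naming scheme and the buildSrcset and pickVariant helpers are purely hypothetical.

```javascript
// Figures taken from the article
const SPECIMENS = 25;
const WIDTHS = [768, 1024, 1280, 1536];
const FORMATS = ["jpg", "webp"];
const MIN_QUALITY = 5;
const MAX_QUALITY = 100;

// Every quality setting from 5 through 100, inclusive
const qualities = [];
for (let q = MIN_QUALITY; q <= MAX_QUALITY; q++) {
  qualities.push(q);
}

const variantsPerSpecimen = qualities.length * WIDTHS.length * FORMATS.length;
const poolSize = variantsPerSpecimen * SPECIMENS;

// Hypothetical naming scheme: /img/<id>-<width>w-q<quality>.<format>
function buildSrcset(id, quality, format) {
  return WIDTHS
    .map((width) => `/img/${id}-${width}w-q${quality}.${format} ${width}w`)
    .join(", ");
}

// Pick a random quality and format for one specimen; the browser then
// chooses the width from the resulting srcset
function pickVariant(id, random = Math.random) {
  const quality = qualities[Math.floor(random() * qualities.length)];
  const format = FORMATS[Math.floor(random() * FORMATS.length)];

  return { id, quality, format, srcset: buildSrcset(id, quality, format) };
}

console.log(qualities.length, variantsPerSpecimen, poolSize);
// logs: 96 768 19200
```

Only the quality and format are randomized on the server in this sketch. The width is left to the browser, which is the division of labor srcset is designed for.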

With the conception and design of the survey covered, let’s segue into how the survey was improved by implementing user feedback into the second round.

Listening to feedback

When I launched round one of the survey, feedback came flooding in from designers, developers, accessibility advocates, and even researchers. While my intentions were good, I inevitably missed some important aspects, which made it necessary to conduct a second round. Iteration and refinement are critical to improving the usefulness of a design, and this survey was no exception. When we improve designs with user feedback, we take a project from average to something more memorable. Getting to that point means taking feedback in stride and addressing distinct, actionable items. In the case of the survey, incorporating feedback not only yielded a better user experience, it improved the integrity of the data collected.

Building a better slider input

Though the first round of the survey was serviceable, I ran into issues with the slider input. In round one of the survey, that input looked like this:

There were two recurring complaints regarding this specific implementation. The first was that participants felt they had to align their rating to one of the labels beneath the slider track. This was undesirable for the simple fact that the slider was chosen specifically to encourage participants to provide nuanced assessments.

The second complaint was that the submit button was disabled until the user interacted with the slider. This design choice was intended to prevent participants from simply clicking the submit button on every page without rating images. Unfortunately, this implementation was unintentionally hostile to the user and needed improvement, because it blocked users from rating images without a clear and obvious explanation as to why.

Fixing the problem with the labels meant redesigning the slider as it appeared in Figure 3. I removed the labels altogether to eliminate the temptation for users to align their answers to them. Additionally, I changed the slider’s background to a gradient pattern, which further implied the granularity of the input.

The submit button issue was a matter of how users were prompted. In round one the submit button was visible, yet the disabled state wasn’t obvious enough to some. After consulting with a colleague, I found a solution for round two: rather than being visible from the start, the submit button is initially replaced by some guide copy:

Once the user interacts with the slider and rates the image, a change event attached to the input fires, which hides the guide copy and replaces it with the submit button:

This solution is less ambiguous, and it funnels participants down the desired path. If someone with JavaScript disabled visits, the guide copy is never shown, and the submit button is immediately usable. This isn’t ideal, but it doesn’t shut out participants without JavaScript.
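A minimal sketch of that behavior follows, assuming the markup ships with the guide copy hidden and the submit button visible so that nothing is blocked without JavaScript. The revealSubmitOnRating name and the three elements passed to it are hypothetical.

```javascript
// Hypothetical wiring: `slider` is the range input, `guide` is the
// guide copy element, and `submit` is the submit button.
function revealSubmitOnRating(slider, guide, submit) {
  // With JavaScript running, show the guide copy in place of the button
  guide.hidden = false;
  submit.hidden = true;

  // Once the participant rates the image, swap the two back
  slider.addEventListener("change", () => {
    guide.hidden = true;
    submit.hidden = false;
  }, { once: true });
}

// In a browser (selectors are assumptions):
// revealSubmitOnRating(
//   document.querySelector(".rating-slider"),
//   document.querySelector(".rating-guide"),
//   document.querySelector(".rating-submit")
// );
```

Because the script is what hides the button, participants without JavaScript simply never see the guide copy.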

Addressing scrolling woes

The survey page works especially well in portrait orientation. Participants can see all (or most) of the image without needing to scroll. In browser windows or mobile devices in landscape orientation, however, the survey image can be larger than the viewport:

Working with such limited vertical real estate is tricky, especially in this case where the slider needs to be fixed to the bottom of the screen (which addressed an earlier bit of user feedback from round one testing). After discussing the issue with colleagues, I decided that animated indicators in the corners of the page could signal to users that there’s more of the image to see.

When the user hits the bottom of the page, the scroll indicators disappear. Because animations may be jarring for certain users, a prefers-reduced-motion media query is used to turn off this (and all other) animations if the user has a stated preference for reduced motion. In the event JavaScript is disabled, the scrolling indicators are always hidden in portrait orientation where they’re less likely to be useful and always visible in landscape where they’re potentially needed the most.
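The decision of when to show the indicators might look something like the sketch below. Both helpers are hypothetical, and the matchMedia check is a JavaScript counterpart to the CSS media query described above rather than the survey’s own approach.

```javascript
// Hypothetical helper: decides whether scroll indicators should show,
// given the scroll position and the viewport and page heights in pixels.
function shouldShowIndicators({ scrollY, viewportHeight, pageHeight }) {
  // Nothing to scroll to when the page fits within the viewport
  if (pageHeight <= viewportHeight) {
    return false;
  }

  // Hide the indicators once the bottom of the page is reached
  return scrollY + viewportHeight < pageHeight;
}

// Hypothetical helper: true when the user has asked for reduced motion.
// `win` is the window object.
function prefersReducedMotion(win) {
  return typeof win.matchMedia === "function" &&
    win.matchMedia("(prefers-reduced-motion: reduce)").matches;
}

// In a browser, inside a scroll handler:
// shouldShowIndicators({
//   scrollY: window.scrollY,
//   viewportHeight: window.innerHeight,
//   pageHeight: document.documentElement.scrollHeight
// });
```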

Avoiding overscaling of image specimens

One issue that was brought to my attention by a coworker was how the survey image seemed to expand boundlessly with the viewport. On mobile devices this isn’t such a problem, but on large screens and even modestly sized high-density displays, images can be scaled excessively. Because the responsive img tag’s srcset attribute specifies a maximum resolution image of 1536w, an image can begin to overscale at sizes as “small” as 768 pixels wide on devices with a device pixel ratio of 2.

Some overscaling is inevitable and acceptable. However, when it’s excessive, compression artifacts in an image can become more pronounced. To address this, the survey image’s max-width is set to 1536px for standard displays as of round two. For devices with a device pixel ratio of 2 or higher, the survey image’s max-width is set to half that at 768px:
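As a rough illustration of that rule in JavaScript (the survey itself presumably applies it in CSS, and both helper names are assumptions):

```javascript
// Widest image candidate listed in srcset, per the article
const LARGEST_CANDIDATE = 1536;

// Hypothetical helper mirroring the rule described above: 1536 CSS
// pixels on standard displays, half that at a device pixel ratio of 2
// or higher
function maxImageWidth(dpr) {
  return dpr >= 2 ? LARGEST_CANDIDATE / 2 : LARGEST_CANDIDATE;
}

// Hypothetical helper: the CSS width beyond which the largest candidate
// no longer has enough pixels for the screen's density
function overscaleThreshold(dpr) {
  return LARGEST_CANDIDATE / dpr;
}

// In a browser:
// maxImageWidth(window.devicePixelRatio || 1);
```

At a device pixel ratio of 2, both helpers return 768, which is where the figure above comes from.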

This minor (yet important) fix ensures that images aren’t scaled beyond a reasonable maximum. With a reasonably sized image asset in the viewport, participants will assess images close to or at a given image asset’s natural dimensions, particularly on large screens.

User feedback is valuable. These and other UX feedback items I incorporated improved both the function of the survey and the integrity of the collected data. All it took was sitting down with users and listening to them.

Wrapping up

As round two of the survey gets under way, I’m hoping the data gathered reveals something exciting about the relationship between performance and how people perceive image quality. If you want to be a part of the effort, please take the survey. When round two concludes, keep an eye out here for a summary of the results!

Thank you to those who gave their valuable time and feedback to make this article as good as it could possibly be: Aaron Gustafson, Jeffrey Zeldman, Brandon Gregory, Rachel Andrew, Bruce Hyslop, Adrian Roselli, Meg Dickey-Kurdziolek, and Nick Tucker.

Additional thanks to those who helped improve the image quality survey: Mandy Tensen, Darleen Denno, Charlotte Dann, Tim Dunklee, and Thad Roe.
